Gradient descent algorithm for multiple linear regression

(i.e. for linear regression with multiple variables)

Terminology (notation used):

$n$ - this is the number of features (the number of input variables)

$X_1, X_2, \dots, X_n$ - these are the features

$X_j$ - the $j$-th feature ($j = 1,2,\dots,n$)

$Y$ - the target (output) variable

$m$ - the size of the training set (the number of training examples)

$X_j^{(i)}$ - the $i$-th training value from the $j$-th feature ($i = 1,2,\dots,m$)

Let's assume that we have the following features and training examples.

| # |

$X_1$ |

$X_2$ |

$X_3$ |

... |

$X_n$ |

$Y$ |

| 1 |

2100 |

5 |

1 |

... |

45 |

460 |

| 2 |

1400 |

3 |

2 |

... |

40 |

235 |

| 3 |

1530 |

3 |

2 |

... |

30 |

315 |

| ... |

... |

... |

... |

... |

... |

... |

| m |

850 |

2 |

1 |

... |

35 |

175 |

Here we have that

$X_1^{(3)} = 1530$, $X_2^{(2)} = 3$, $X_n^{(3)} = 30$, $X_n^{(m)} = 35$

$\overrightarrow{X}^{(i)}$ - this is a row vector of all $i$-th training values (basically the $i$-th row from the table above without the Y values), so this is the $i$-th training example

$\overrightarrow{X}^{(i)} = (X_1^{(i)}, X_2^{(i)}, \dots, X_n^{(i)})$, for $i=1,2,\dots,m$

Note: The training examples are the rows from the table above.

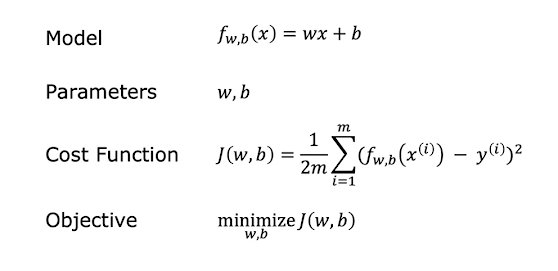

The linear regression model for a single feature was defined as follows.

$f_{w,b} = w \cdot x + b \tag{1}$

The linear regression model for multiple features is defined as follows.

$f_{w,b} = \sum_{j=1}^n w_j \cdot x_j + b \tag{2}$

We can rewrite this model in vector notation. Let us denote

$\overrightarrow {w} = (w_1, w_2, \dots, w_n)$ - this is a row vector of $n$ scalars (these are the weights assigned to the features)

$b$ - this is a scalar (a number), this value $b$ is the bias

$\overrightarrow {x} = (x_1, x_2, \dots, x_n)$ - this is a row vector of $n$ scalars (the input variables to our model)

Then for the multiple linear regression model we obtain

$f_{\overrightarrow{w},b} = \overrightarrow{w} \cdot \overrightarrow{x} + b \tag{3}$

Here $\overrightarrow{w}, \overrightarrow{b}$ are the parameters of the multiple linear regression model.

In this notation $\overrightarrow{w}\cdot \overrightarrow{x}$ is the dot product of the vectors $\overrightarrow{w}$ and $\overrightarrow{x}$ i.e.

$\overrightarrow{w} \cdot \overrightarrow{x} = \sum_{j=1}^n w_j \cdot x_j \tag{4}$

Note: This is called multiple linear regression, and not multivariate linear regression. The term multivariate linear regression in machine learning is used for something else.

For example in the table above $Y$ might be the price of a house (in 1000s of US dollars) which we are trying to estimate (i.e. build a model for), $X_1$ might be the size of the house in square feet, $X_2$ might be the number of bedrooms, $X_3$ might be the number of floors, and $X_n$ might be the age of the house in years (let us say n=4). Then one possible linear model for the price (Y) of the house could be as follows:

$f_{w,b}(x) = 0.1 \cdot x_1 + 4 \cdot x_2 + 10 \cdot x_3 + (-2) \cdot x_4 + 80 \tag{5}$

Here we have $b=80$, and this is called the base price.

We denote

$\hat{y}^{(j)} = f_{\overrightarrow{w}, b} (x^{(j)}) = \sum_{i=1}^n w_i \cdot x_i^{(j)} + b\tag{6}$

This value is the predicted value (by the linear regression model) which

corresponds to the $j$-th training example (here $j=1,2,\dots,m$).

We also have

$y^{(j)}$ - the actual value corresponding to the $j$-th training example ($j=1,2,\dots,m$).

The least mean squares (LMS) cost function is defined as follows

$$J(\overrightarrow{w},b) = J(w_1, w_2, \dots, w_n, b) = \frac{1}{2m}\sum_{i=1}^m (\hat{y}^{(i)} - y^{(i)})^2 \tag{7}$$

$$J(\overrightarrow{w},b) = \frac{1}{2m}\sum_{i=1}^m (f_{\overrightarrow{w},b}(\overrightarrow{x}^{(i)}) - y^{(i)})^2 \tag{8}$$

$$J(\overrightarrow{w},b) = \frac{1}{2m} \sum_{i=1}^m (w_1 x_1^{(i)} + w_2 x_2^{(i)} + \dots + w_n x_n^{(i)} + b - y ^ {(i)})^2 \tag{9}$$

So we get that

$$J(\overrightarrow{w},b) = \frac{1}{2m}\sum_{i=1}^m [ (\sum_{j=1}^n w_j \cdot x_j^{(i)}) + b - y^{(i)}]^2 \tag{10}$$

The gradient descent algorithm essentially varies the $w_j$ and $b$ parameters of the model to find the optimal i.e. the minimal value for $J$ (since $J$ is a function of the parameters $b$ and $w_j$, $j=1,2,\dots,n$).

So we compute

$$\frac{\partial{J}}{\partial{w_j}} = \frac{1}{m}\sum_{i=1}^m (f_{\overrightarrow{w},b}(\overrightarrow{x}^{(i)}) - y^{(i)}) \cdot x_j^{(i)} \tag{11}$$

(here $j=1,2,\dots,n$)

and

$$\frac{\partial{J}}{\partial{b}} = \frac{1}{m}\sum_{i=1}^m (f_{\overrightarrow{w},b}(\overrightarrow{x}^{(i)}) - y^{(i)}) \tag{12}$$

Now that we have formulas for these partial derivatives, the gradient descent algorithm for multiple linear regression goes as follows.

1. Pick a small positive number $\alpha \gt 0$.

2. Repeat these four actions until convergence

2.1.

$$temporary\_w_{j} = w_{j} - \alpha \cdot \frac{\partial{J}}{\partial{w_j}}$$ for $j=1,2,\dots,n$

(use the partial derivative expression from (11))

2.2.

$$temporary\_b = b - \alpha \cdot \frac{\partial{J}}{\partial{b}}$$

(use the partial derivative expression from (12))

2.3.

$$w_j = temporary\_w_j$$ for $j=1,2,\dots,n$

2.4.

$$b = temporary\_b$$

- The temporary variables here are used to underline the fact that the algorithm is doing a simultaneous update of the (n+1) parameters $w_1, w_2, \dots, w_n, b$. That means we should not change any of the parameters before we have computed the new values for all the parameters.

- Convergence means that the values of the (n+1) parameters $w_1, w_2, \dots, w_n, b$ have settled i.e. it means they all change by very little after certain number of iterations (say after K iterations/steps). To detect convergence we can use some sort of threshold e.g. we can assume the algorithm has converged if all parameters change only by less then 0.05% after certain number of iterations/steps.

This completes our description of the gradient descent algorithm for multiple linear regression.